Un-needed WU???

28 Mar 2008 15:50:59 UTC

Topic 193593

I have WU 37543742, and I'm the fourth user to receive this WU.

The first one errored out and it was sent out again.

Both other results have been returned and validated successfully.

The only reason I can think of for it being sent to me is that one of the other two was overdue, so I was sent a copy of it.

As this WU hasn't started crunching yet, should I abort it before it starts?

Copyright © 2024 Einstein@Home. All rights reserved.

There is no real point in running it, so I would abort it. However, you should still get credit if you do decide to process it.

BOINC WIKI

BOINCing since 2002/12/8

RE: The only reason I can

True, one was a few hours past the deadline when your copy was sent. But that host replied before yours did, so even though it was late, its result was still checked. Since it validated, that host got credit.

I think aborting would be harmless; I don't think another copy will be sent out. It would divert a few watt-hours from redundant computation to more potentially useful computation.

Thought it was the right

Thought it was the right thing to do.

Will abort it and get a fresh WU to crunch.

RE: Thought it was the

Yes, very well spotted!

In cases like this, the project is much better served by your aborting of the redundant work and getting a fresh replacement. Your daily limit will temporarily reduce by 1 but will be fully restored as soon as your current result is completed.

Cheers,

Gary.

Well I tend to check on Boinc

Well, I tend to check on BOINC from time to time, and when a WU's task name doesn't end in 0 or 1, I check it out.

Hmmm... I wonder if they

Hmmm...

I wonder if they have a new enough server package to just turn on '221' functionality.

If so, then you wouldn't have to do anything in this situation, since the project would send the host an "abort: redundant" command if the task hadn't been started yet.

Alinator

RE: Hmmm... I wonder if

I had held off commenting, but your post pushed me over the edge... LOL...

Bear in mind that the BOINC version would have to support those 221 messages... Mine (5.8.16) doesn't... Oh, and Jord, if you're lurking about, yes, I know it is an antiquated version... :-P

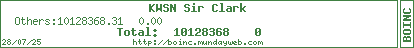

Additionally, this situation turned out MUCH BETTER for the project as a whole than what used to happen more frequently with the older 14-day deadlines. The late host came in just a few hours after the deadline. I don't know for sure whether the CPU scheduler would've caused the same thing to happen with a 14-day deadline, but those extra 4 days probably meant less time in "EDF" / "High Priority" mode than the 14-day deadline would've...so there's a reduction in deadline pressure and less cause for itchy participants to start ranting about how "Einstein is HOGGING MY CPU!!!"...which is a good thing... :-)

If the person really couldn't have completed it by day 14, then based on the speed of Clark's system, they might not have gotten anything for it at all, because Clark may have completed the reissue and reported it back before the original host was able to complete and report...

So, again, thanks to Bruce and Bernd for extending out to 18 days...

RE: I had held off

LOL...

Yep, I sure was glad to see a little more slack built into the EAH deadlines for the higher template frequencies.

As for 5.8.16 being antiquated, I'd counter that, depending on your host OS, it might be the only game in town! :-(

Also, since you mentioned it, this seems to indicate the project is still sending work to hosts which are on the hairy edge of being able to make the deadline, even if they ran EAH alone 24/7. I know I was having this problem with my slower slugs earlier on in S5R2, but I moved them over to Leiden a while back, so I can't say for sure what the situation is today. I don't think the extra slack would help much in that regard, but that's a 'from the hip' evaluation. I guess I'll find out for sure when they either pull an EAH task legitimately on share or have to pull one as backup work.

Concerning EAH and EDF... Let's see if I can guess the scenario.

Participant sets up their host to run 'Whatever'@home with EAH as a backup project (mostly because you can count on it being there 24/7 when you need a task to do), configures a Resource Share split of 99.999% to 0.001% WAH/EAH, and then wonders why EAH is 'hogging' the CPU when the task is a few days away from its deadline! ;-)
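A rough sketch of why that scenario ends in "hogging", assuming a single-core host that is always on and a simplified model where a task only ever gets its resource-share slice of CPU time. The real BOINC client runs a more detailed scheduling simulation; `share_fraction` and `needs_high_priority` are hypothetical names used only for illustration:

```python
def share_fraction(project_share: float, other_share: float) -> float:
    """Fraction of CPU time a project gets from its resource share (simplified)."""
    return project_share / (project_share + other_share)


def needs_high_priority(cpu_hours_left: float, hours_to_deadline: float,
                        fraction: float) -> bool:
    """Hypothetical check: True if the task would miss its deadline when it only
    receives its resource-share fraction of a single, always-on CPU core."""
    # Wall-clock hours needed if the task only ever runs during its share.
    wall_hours_needed = cpu_hours_left / fraction
    return wall_hours_needed > hours_to_deadline


# EAH as a 0.001% backup project next to 'Whatever'@home at 99.999%.
eah = share_fraction(0.001, 99.999)
print(needs_high_priority(10, 72, eah))   # True: needs about 1,000,000 wall-clock hours
print(needs_high_priority(10, 72, 1.0))   # False: 10 CPU hours of work, 72 hours left
```

With a share that small, almost any EAH task "can't" finish on share, so the client has to run it at high priority to make the deadline, which is exactly when it looks like EAH is grabbing the CPU.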

Alinator