Can't parse scheduler reply

26 Feb 2005 14:57:51 UTC

Topic 188042

Just recently I've been getting

SCHEDULER_REPLY::parse(): bad first tag content type:text/plain

Can't parse scheduler reply

When I've seen this before it's been down to the database connection limit being exceeded, but the scheduler sends a malformed message to this effect.

@Admins: can you fix the scheduler so it sends a properly constructed response?

Does E@H need more processing power already? (all the other projects have found they do!)

Can you tell the exact time of this (preferably in UTC, or give your timezone)? We found that the server halted this morning for no reason we have been able to find yet. Apparently the WU generator was damaged, which might be the reason for this. Bruce is working on it right now.

BM

>>Can you tell the exact time of this (preferably in UTC, or give your timezone)?

It would have been about five minutes before I posted the message: 14:50 UTC, or thereabouts.

Thanks. Watch the front page for news on this.

BM

> Just recently I've been getting

>

> SCHEDULER_REPLY::parse(): bad first tag content type:text/plain

> Can't parse scheduler reply

>

> When I've seen this before it's been down to the database connection limit

> being exceeded, but the scheduler sends a malformed message to this effect.

This is exactly what happened: we exceeded the DB connection limit.

> @Admins: can you fix the scheduler so it sends a properly constructed

> response?

Yes! I'll send you an email: please send me the sched_reply.xml file.

> Does E@H need more processing power already? (all the other projects have

> found they do!)

So far our system load averages about 5%. So we don't think so.

Bruce

Director, Einstein@Home

I can't send the actual sched_reply.xml file - it's been overwritten with successful replies. But here's the version taken from the LHC@Home pages, which had the same problem before Christmas. I have added marker lines so the beginning and end of the file are clear.

File starts on next line------------------------------

Content-type: text/plain

[scheduler_reply]

[message priority="low"]Server can't open database[/message]

[request_delay]3600[/request_delay]

[project_is_down/]

[/scheduler_reply]

[scheduler_reply]

[project_name]LHC@home[/project_name]

[/scheduler_reply]

File ends on previous line--------------------------------

The problem appears to be the first line. The 'Content-type...' message shouldn't be included.
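To illustrate the point, here is a minimal Python sketch of why a leading 'Content-type...' line trips a parser that expects the reply to open with the scheduler_reply tag. This is not the BOINC client's actual code: the function names `first_tag_ok` and `strip_cgi_header` are made up for the example, and I'm assuming the real file uses angle brackets where I typed square brackets above.

```python
# Hypothetical sketch, NOT the real BOINC parser: it only mimics a strict
# "first tag must be <scheduler_reply>" check to show the failure mode.

# Sample reply modelled on the file above (angle brackets are an assumption).
reply = """Content-type: text/plain

<scheduler_reply>
<message priority="low">Server can't open database</message>
<request_delay>3600</request_delay>
<project_is_down/>
</scheduler_reply>
"""


def first_tag_ok(raw: str, expected: str = "<scheduler_reply>") -> bool:
    """Return True if the first non-empty line is the expected opening tag."""
    for line in raw.splitlines():
        if line.strip():
            return line.strip() == expected
    return False  # empty reply


def strip_cgi_header(raw: str) -> str:
    """Drop a leading Content-type line and the blank lines around it."""
    lines = raw.splitlines()
    i = 0
    while i < len(lines) and (
        not lines[i].strip() or lines[i].lower().startswith("content-type:")
    ):
        i += 1
    return "\n".join(lines[i:])


if __name__ == "__main__":
    print(first_tag_ok(reply))                    # header line comes first
    print(first_tag_ok(strip_cgi_header(reply)))  # header removed
```

With the header in place the first-tag check fails; once it is stripped, the same reply passes. Of course the proper fix is on the server side, so that the error reply is sent the same way as a normal one.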

>>So far our system load averages about 5%. So we don't think so.

Hehe - Good luck!

Einstein@Home - 2005-02-26 12:59:46 - SCHEDULER_REPLY::parse(): bad first tag Content-type: text/plain

Einstein@Home - 2005-02-26 12:59:46 - Can't parse scheduler reply

This just started to happen to me. The program was trying to return a WU that had just been finished.

John

Einstein@Home - 2005-02-26 19:59:17 - Sending request to scheduler: http://einstein.phys.uwm.edu/EinsteinAtHome_cgi/cgi

Einstein@Home - 2005-02-26 19:59:20 - Scheduler RPC to http://einstein.phys.uwm.edu/EinsteinAtHome_cgi/cgi succeeded

Einstein@Home - 2005-02-26 19:59:20 - SCHEDULER_REPLY::parse(): bad first tag Content-type: text/plain

Einstein@Home - 2005-02-26 19:59:20 - Can't parse scheduler reply

Einstein@Home - 2005-02-26 19:59:20 - Deferring communication with project for 1 minutes and 0 seconds

ALL GLORY TO THE HYPNOTOAD!

Do You Dare?

Need help?

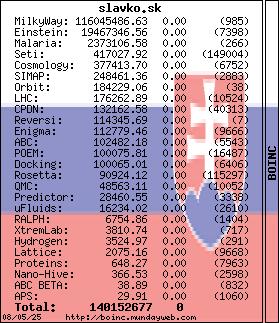

I have the exact same problem as slavko.sk on two of my machines. They are running Win 98 SE and BOINC 4.19 but never exhibited this condition before the crash. I assume you folks are working on the database server or something, since I also get no reply from the scheduler on a third machine at home running WinXP SP1 and BOINC 4.19. Both locations have clean high-speed DSL lines, with no proxy in use.

We had some problems with the scheduler as a result of the server crash.

The scheduler is now running again.